
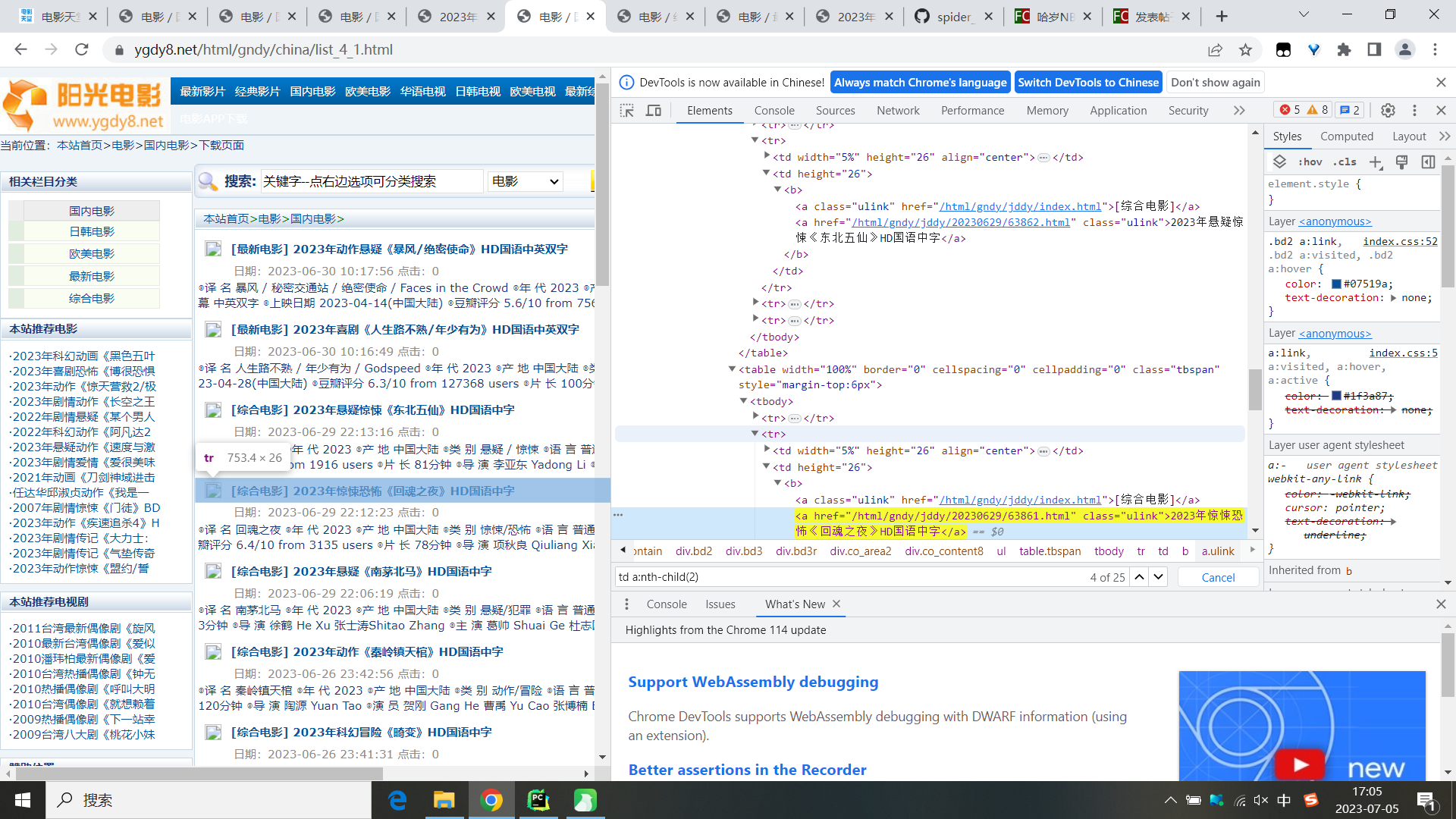

Hi all, this selector shows 25 `href`s (see the screenshot), but my scraper collects 26. What is the 26th one?

```python
import requests
import logging
from urllib.parse import urljoin
from pyquery import PyQuery as pq

index_url = 'https://www.ygdy8.net/html/gndy/china/list_4_{}.html'
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}
PAGE = 3
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s: %(message)s')


# Send the request
def scrape_page(url):
    logging.info('Scraping: {}...'.format(url))
    try:
        response = requests.get(url=url, headers=header)
        if response.status_code == 200:
            return response.text
        logging.error('Request failed, status code: {}'.format(response.status_code))
    except requests.RequestException:
        logging.error('Error while scraping {}'.format(url))


# Build the list-page URL and request it
def scrape_index(page):
    url = index_url.format(page)
    return scrape_page(url)


# Parse the detail-page URLs
def detail_url(index_html):
    details_url = []
    doc = pq(index_html)
    hrefs = doc('td a:nth-child(2)').items()
    for href in hrefs:
        urls = href.attr('href')
        url = urljoin(index_url, urls)
        details_url.append(url)
    return details_url


def main():
    for page in range(1, 2):
        index_html = scrape_index(page)
        href = detail_url(index_html)
        print(len(href))
        logging.info('detail_url:{}'.format(list(href)))


if __name__ == '__main__':
    main()
```

These are the URLs that were scraped:

```
2023-07-05 17:01:34,079 - INFO: detail_url:['https://www.ygdy8.net/html/gndy/dyzz/20230630/63864.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230630/63863.html', 'https://www.ygdy8.net/html/gndy/jddy/20230629/63862.html', 'https://www.ygdy8.net/html/gndy/jddy/20230629/63861.html', 'https://www.ygdy8.net/html/gndy/jddy/20230629/63860.html', 'https://www.ygdy8.net/html/gndy/jddy/20230626/63851.html', 'https://www.ygdy8.net/html/gndy/jddy/20230626/63850.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230625/63845.html', 'https://www.ygdy8.net/html/gndy/jddy/20230624/63839.html', 'https://www.ygdy8.net/html/gndy/jddy/20230624/63838.html', 'https://www.ygdy8.net/html/gndy/jddy/20230624/63837.html', 'https://www.ygdy8.net/html/gndy/jddy/20230623/63835.html', 'https://www.ygdy8.net/html/gndy/jddy/20230623/63834.html', 'https://www.ygdy8.net/html/gndy/jddy/20230620/63827.html', 'https://www.ygdy8.net/html/gndy/jddy/20230618/63822.html', 'https://www.ygdy8.net/html/gndy/jddy/20230618/63821.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230618/63820.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230616/63815.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230615/63813.html', 'https://www.ygdy8.net/html/gndy/jddy/20230614/63812.html', 'https://www.ygdy8.net/html/gndy/jddy/20230614/63809.html', 'https://www.ygdy8.net/html/gndy/jddy/20230611/63801.html', 'https://www.ygdy8.net/html/gndy/jddy/20230611/63800.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230610/63799.html', 'https://www.ygdy8.net/html/gndy/dyzz/20230609/63797.html', 'https://www.ygdy8.netlist_4_3.html']
```
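Every genuine detail URL in this log has the same shape (`/html/gndy/<category>/<8-digit date>/<id>.html`), so a regular-expression check is one quick way to single out the odd entry. This is a sketch only: the pattern `DETAIL_RE` is an assumption inferred from the logged URLs, not a documented rule of the site, and `split_urls` is a hypothetical helper.

```python
import re

# Assumed shape of a detail-page URL, inferred only from the logged URLs.
DETAIL_RE = re.compile(r'^https://www\.ygdy8\.net/html/gndy/\w+/\d{8}/\d+\.html$')


def split_urls(urls):
    """Split URLs into (detail_urls, other_urls) according to DETAIL_RE."""
    detail, other = [], []
    for url in urls:
        if DETAIL_RE.match(url):
            detail.append(url)
        else:
            other.append(url)
    return detail, other


if __name__ == '__main__':
    logged = [
        'https://www.ygdy8.net/html/gndy/dyzz/20230630/63864.html',
        'https://www.ygdy8.net/html/gndy/jddy/20230629/63862.html',
        'https://www.ygdy8.netlist_4_3.html',
    ]
    detail, other = split_urls(logged)
    print(other)  # ['https://www.ygdy8.netlist_4_3.html']
```

Such a filter can also serve as a safety net after a tighter selector, in case the page layout changes.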

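As I explained a moment ago, this happens because your selector `td a:nth-child(2)` also matches a pagination link at the bottom of the page, not only the movie detail links. If you look at the page's source code, you will find a "next page" (下一页) link like this: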

```html
<td align="right"><a href="list_4_3.html">下一页</a></td>
```

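This link's `href` is `list_4_3.html`, and once it is joined onto the site URL it becomes `https://www.ygdy8.netlist_4_3.html`, the 26th URL you collected. (That exact string looks like plain string concatenation; `urljoin` with the `index_url` shown above would give `https://www.ygdy8.net/html/gndy/china/list_4_3.html` instead. Either way, it comes from the pagination link.) Since this is not a movie detail URL, switch to a more specific selector that matches only the movie detail links, such as `td.b a:nth-child(2)` or `td.b a.ulink`. That way the extra URL is never collected.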


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
